The Data Platform Isn't a Stack—It's a System

Where Is Everyone?

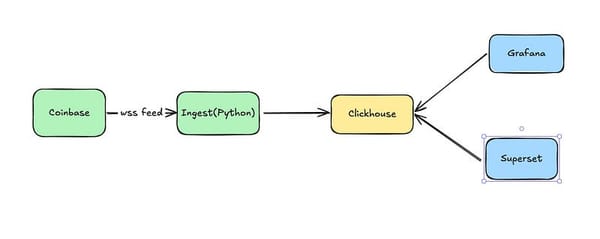

I was flummoxed. I'd done exactly what was asked of me - and the tech worked. We'd built a data platform for a finance company using Apache Kafka and a shiny new Kappa architecture. It had buy-in, it had features, and I was genuinely excited to launch it.

Some of the work was hard - especially securing the platform - but we got it done. This was the stack everyone was talking about. It was modern, scalable, real-time. It was going to change how the firm handled data.

Apparently, I was the only one excited.

We had a few adopters - the equities desk even saved some money by migrating their data feeds, which felt like a win. But across the rest of the company? Crickets.

In hindsight, the failure was obvious. I had a clear vision - validated by blog posts and conference talks - and I sold it well. I talked to engineers across teams and got their buy-in.

But here's the rub: I already knew what I wanted to build.

I didn't ask people what problems they were trying to solve. I brought them the solution, framed in terms of what was hot in the ecosystem, and they nodded along. That worked in equities, where I'd worked for five years and knew the problems intimately. In other areas, I missed the mark.

There were warning signs - questions about problems that didn't fit my model - but I rationalized them. I found ways my platform could solve them, so I moved on.

The launch landed with a thud. I had succeeded as an engineer - but failed as a partner. I built something technically sophisticated, but misaligned with the business. I checked all the boxes, but didn't understand the need behind them.

It wasn't that I ignored requirements. In some areas, like security, I got it right because I took the time to understand what was truly needed. But for the platform as a whole, I never stopped to ask: What problems are we really trying to solve?

I'm Not Alone

One of the most persistent problems in data engineering is that we often fail to tie our work to the actual goals of the business. The classic symptom is a dashboard that no one uses. My data platform was a more expensive and less visible example of the same class of failure. Defining a model that binds data platform implementation to business needs can help avoid that, while fostering better collaboration between technical and business partners.

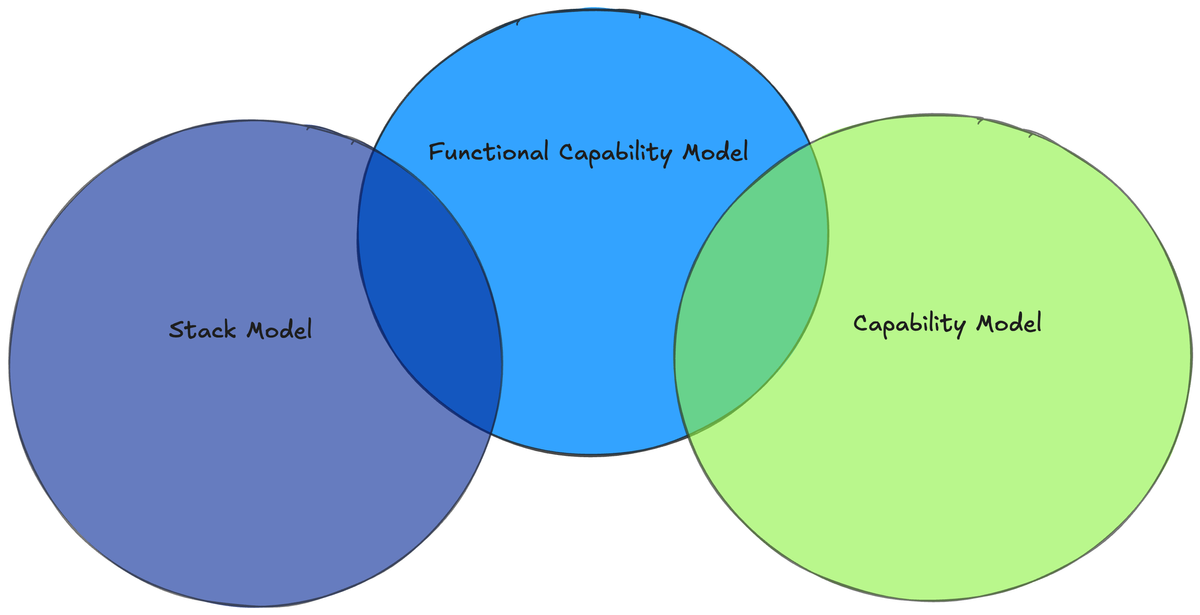

We often describe platforms by the components they’re made of, or in terms of broad capabilities. These models are useful for orientation and sanity checks—but not for designing systems that fit the real needs of a specific business.

As vendors consolidate and tools overlap, it’s more important than ever to think clearly about what our organization actually needs. Every vendor makes different compromises. To choose wisely—and build something that lasts—we need to move beyond generic models.

Component Model

Over the last couple of years, the industry has rallied around a layered stack model of the data platform: a set of well-defined technical components intended to cover the full data lifecycle.

What It Is

IBM defines a data platform as:

... a technology solution that enables the collection, storage, cleaning, transformation, analysis and governance of data. Data platforms can include both hardware and software components. They make it easier for organizations to use their data to improve decision making and operations.

IBM then defines five layers of the data platform:

- Data Storage

- Data Ingestion

- Data Transformation

- BI and Analytics

- Data Observability

Monte Carlo builds directly on IBM's five layers, offering more implementation detail and adding optional but valuable layers like orchestration and governance.

Over the last 6 to 12 months, we've also seen significant consolidation among data engineering tools, as the leading players try to move from owning one or two layers of this stack to providing the full offering.

Where It Falls Short

Curiously, one capability not listed — access control — is often the one that vendors withhold from open-source or free-tier offerings. That alone raises the question: if the model overlooks something so central to how value is captured, how complete can it really be?

The layer approach to data architecture also fails to address critical industry-specific requirements. For example, healthcare organizations must maintain HIPAA compliance across their entire data ecosystem, a requirement easily overlooked when focusing solely on components. Adding a governance layer might address such requirements, but the model gives us no way to tell whether it actually does. Furthermore, each layer in the component model hides significant complexity; even the seemingly straightforward storage layer spans traditional data warehouses, data lakes, and modern lakehouse architectures.

Further, as vendors consolidate and try to become all-in-one data platforms, different parts of a data stack increasingly offer overlapping capabilities. For example, dbt offers data lineage, and so do DataHub and Snowflake. Each offers different depth, context, and assumptions, which matters when you are trying to reason about lineage in production. It is entirely reasonable to have all three in a mature data stack, so which one becomes authoritative?
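One way to make the authority question concrete is to keep an explicit inventory of which tools claim which capabilities, then flag any capability claimed by more than one tool that has no designated owner. This is a minimal sketch under stated assumptions: the inventory and the `unresolved_overlaps` helper are hypothetical, and only the lineage overlap among dbt, DataHub, and Snowflake comes from the example above.

```python
from collections import defaultdict

# Hypothetical inventory of which capabilities each tool in the stack claims.
# Only the lineage overlap reflects the text; the other entries are illustrative.
TOOL_CAPABILITIES = {
    "dbt": {"transformation", "lineage"},
    "DataHub": {"catalog", "lineage"},
    "Snowflake": {"storage", "lineage", "access_control"},
}


def unresolved_overlaps(tool_capabilities, authoritative):
    """Return capabilities claimed by two or more tools that have no designated
    authoritative tool, mapped to the claiming tools (sorted case-insensitively)."""
    providers = defaultdict(set)
    for tool, capabilities in tool_capabilities.items():
        for capability in capabilities:
            providers[capability].add(tool)
    return {
        capability: sorted(tools, key=str.lower)
        for capability, tools in providers.items()
        if len(tools) > 1 and capability not in authoritative
    }


if __name__ == "__main__":
    # No owner chosen yet, so lineage is flagged.
    print(unresolved_overlaps(TOOL_CAPABILITIES, authoritative={}))
    # → {'lineage': ['DataHub', 'dbt', 'Snowflake']}
    # Once the team names an owner, the overlap is a decision rather than an accident.
    print(unresolved_overlaps(TOOL_CAPABILITIES, authoritative={"lineage": "DataHub"}))
    # → {}
```

The check does not pick the authoritative tool for you; it only makes sure someone has.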

The real danger of the component model is that it leads to checkbox thinking: if we build these layers, then we're done. In reality, we can build all of the above layers, build them well, and still completely fail to serve the data needs of the organization.

Capabilities Model

Another way to think about a data platform is the capabilities approach, which asks: what does a data platform do? Dylan Anderson of "The Data Ecosystem" moves in this direction with his definition:

It should make sense of data

He then goes a bit further, listing the capabilities of a data platform:

- Ingests Data – It brings data in from internal and external sources

- Integrates Data – Helps align/ clean the data into one storage platform

- Stores the Data – Structures the data in a storage layer that is easy to use

- Processes & Transforms – Enables changes to the data to fulfil business needs

- Manages the Data – Ensures the quality of data assets

- Secures Data – Guarantees data is safely and securely managed, accessed and used

- Serves Curated Data – Provides customised data sets for specified use cases

- Provides Insights – Facilitates better decisions through data insights

This approach moves in the direction of defining what we need out of a data platform, but it lacks clarity on business requirements. It doesn't give us quite enough to create an architecture or evaluate our platform choices.

Why It Still Misses

Like the layers model, the capabilities model gets blurry as vendors push into adjacent spaces, whether by building or by acquisition. Take dbt, for example: its core offering is to 'process and transform', but it has also moved into 'manages the data'.

Finally, the capabilities model commits the same fundamental error as the layers model: it doesn't tie capabilities back to specific business needs. Used as is, it doesn't allow a technologist to have a meaningful conversation with business partners about what they are doing and why.

Functional Capability Model

The above models are fine for getting a broad idea of what a data platform should do. But when it comes to implementing a platform or making purchasing decisions, we need to think more precisely about what a particular organization needs to do with data, rather than just which layers are present. That breaks down into three questions:

- What capabilities are needed today?

- What capabilities are we likely to need in the future?

- What tools implement those capabilities, and how can we integrate them over time?
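The three questions can be captured in a simple capability register. This Python sketch is illustrative only: the `Capability` record, the `review` helper, and the example entries are hypothetical, chosen to show how answering the questions separates what is covered, what is a gap today, and what is a future need that can be deliberately deferred.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Capability:
    """One entry in a hypothetical capability register."""
    name: str
    needed_today: bool          # question 1 (today) vs. question 2 (likely future need)
    tool: Optional[str] = None  # question 3: the implementing tool, or None if nothing does yet


def review(register):
    """Split the register into covered capabilities, gaps to close now,
    and future needs that are explicitly deferred."""
    return {
        "covered": [c.name for c in register if c.tool is not None],
        "gaps": [c.name for c in register if c.needed_today and c.tool is None],
        "deferred": [c.name for c in register if not c.needed_today and c.tool is None],
    }


# Illustrative entries only, loosely modeled on a finance firm.
REGISTER = [
    Capability("Ingest market data feeds", needed_today=True, tool="Kafka"),
    Capability("Log access to client data", needed_today=True),
    Capability("Real-time risk dashboards", needed_today=False),
]

if __name__ == "__main__":
    for bucket, names in review(REGISTER).items():
        print(f"{bucket}: {names}")
```

Keeping deferred items in the register, rather than leaving them silently unbuilt, turns "not yet" into an explicit decision that can be revisited.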

What It Looks Like In Practice

Capabilities should be treated as a roadmap, not a blueprint. Some are critical on day one; others may become important only as the business scales or regulatory requirements change. Revisiting the capability map quarterly, or after major product shifts, keeps the platform aligned. The layers model remains useful as a blueprint, while the functional capability model acts as a living roadmap. The two are complementary, not in conflict.

The key is to define capabilities in terms of what our particular business needs. For example, in a healthcare company, some required capabilities could be:

- Restrict disclosure on patient request

- Create Safe Harbor compliant data for testing

- Log access to ePHI

Similarly, a public company will require:

- Track changes to ETL/ELT pipelines

- Track data changes

- Ensure no single person can ingest, transform, approve, and report data without oversight

- Maintain immutable access logs

A consumer finance company, meanwhile, will need:

- Detect anomalies

- Enforce least privilege access

All of these capabilities are similar in that they focus on protecting consumers, and all fall broadly under 'governance'. But they are different enough that relying too heavily on a high-level model could leave the business exposed, either through non-compliance or simply by being underpowered.
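To illustrate how a layer-level view can hide these differences, this sketch compares a checkbox check ("do we have a governance layer?") with a capability-level check against the lists above. It is a hypothetical illustration: the set of layers built, the capability identifiers, and what the tooling "implements" are all assumptions made for the example.

```python
# Hypothetical: layers we have built, as a component-model checklist sees them.
LAYERS_BUILT = {"storage", "ingestion", "transformation", "bi_analytics",
                "observability", "governance"}

# Capabilities from the lists above, one set per business type.
# A layer model would file every one of them under "governance".
REQUIRED = {
    "healthcare": {"restrict_disclosure_on_request", "safe_harbor_test_data",
                   "log_ephi_access"},
    "public_company": {"track_pipeline_changes", "track_data_changes",
                       "separation_of_duties", "immutable_access_logs"},
    "consumer_finance": {"detect_anomalies", "least_privilege_access"},
}

# Hypothetical: what our governance tooling actually implements today.
IMPLEMENTED = {"least_privilege_access", "track_pipeline_changes",
               "immutable_access_logs"}


def layer_check(layers_built):
    """Checkbox thinking: pass if a governance layer exists at all."""
    return "governance" in layers_built


def capability_gaps(business_type, implemented):
    """Capability thinking: list required capabilities that nothing implements."""
    return sorted(REQUIRED[business_type] - implemented)


if __name__ == "__main__":
    print("governance layer present:", layer_check(LAYERS_BUILT))
    for business_type in REQUIRED:
        print(business_type, "gaps:", capability_gaps(business_type, IMPLEMENTED))
```

The layer check passes regardless of business type, while the capability check shows that each business is exposed in a different place.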

A Familiar Idea, With a Different Focus

Functional capabilities aren't a new idea. In fact, they're a return to first principles: define the problem, then design the system. Framing them explicitly as a functional capability model helps cut through vendor narratives and architecture cargo cults. We stop chasing features and start building platforms that deliver value.

If you've worked in enterprise architecture, this may sound familiar: it echoes the capability models used in frameworks like TOGAF. The difference is scope and intent. Traditional capability models often sit at a strategic planning layer and serve long-range planning in large enterprises. This framing is built for today's data teams, operating in faster cycles with shifting needs. It is meant to be tactical and immediate, directly informing platform decisions on the ground, with just enough rigor to design something that fits without over-engineering or over-buying.

Putting Functional Capabilities Into Practice

Using a functional capabilities model asks data platform engineers to do something we’ve traditionally struggled with: deeply understand what the business does. Of course, identifying the right capabilities isn't trivial. It requires engaging with business stakeholders, surfacing assumptions, and making tradeoffs. But doing this early prevents far more expensive rework later—and it’s a skill data teams increasingly need to develop.

That understanding allows us to define the right capabilities—in partnership with the business—and then map those capabilities to tools. This gives us confidence we have coverage where it matters. Mapping capabilities to tools also raises real integration challenges. Two tools may meet the same need differently, with different tradeoffs in extensibility, interoperability, or governance.

These tensions already existed, but being capability-first at least makes them visible.

Of course, evaluating tools against capabilities is a deep topic in its own right. I won't explore it here beyond saying that decisions should emerge from clearly articulated needs rather than checklists.

Being capability-first also unlocks a powerful constraint: the ability to say no. Not every capability is equally valuable. When capabilities are mapped explicitly, we can make informed decisions about what to invest in, and what to skip or postpone, based on business value.

For CTOs and CDOs, this creates a shared language to justify decisions in terms business stakeholders can engage with. For engineers, it creates context, clarity, and autonomy. We no longer work in a vacuum—we work in a system that makes sense.

It takes the choice between Snowflake, Databricks, and MotherDuck out of the realm of technical nuance and into the realm of sound business decisions.

You don’t need to throw out the layers model. But you do need to stop treating it as a roadmap. Start with functional capabilities—and build a platform that fits.